This work is licensed under a Creative Commons Attribution 4.0 International License.

Survey/review study

From Emotion AI to Cognitive AI

Guoying Zhao *, Yante Li, and Qianru Xu

University of Oulu, Pentti Kaiteran Katu 1, Linnanmaa 90570, Finland

* Correspondence: guoying.zhao@oulu.fi

Received: 22 September 2022

Accepted: 28 November 2022

Published: 22 December 2022

Abstract: Cognitive computing is recognized as the next era of computing. To make hardware and software systems more human-like, emotion artificial intelligence (AI) and cognitive AI, which simulate human intelligence, are at the core of real AI. The current boom in sentiment analysis and affective computing in computer science has given rise to the rapid development of emotion AI. Research on cognitive AI, however, has only started in the past few years. In this visionary paper, we briefly review the current development of emotion AI, introduce the concept of cognitive AI, and propose an envisioned future for cognitive AI, which intends to let computers think, reason, and make decisions in ways similar to humans. The important aspects of cognitive AI, namely engagement, regulation, decision making, and discovery, are further discussed. Finally, we propose important directions for constructing future cognitive AI, including data and knowledge mining, multi-modal AI explainability, hybrid AI, and potential ethical challenges.

Keywords: emotion; cognition; human intelligence; artificial intelligence

1. Introduction

Intelligence is what makes us human [1]. Human intelligence is a mental attribute that consists of the ability to learn from experience, adapt to new conditions, manage complex abstract concepts, and interact with and change the environment using knowledge. It is human intelligence that makes humans different from other creatures.

Emotional and cognitive functions are inseparable from the human brain and they jointly form human intelligence [2, 3]. Emotions are biological states associated with the nervous system. They are brought on by neurophysiological changes associated with thoughts, feelings, behavioral responses, and pleasure or displeasure. Humans express their feelings or react to external and internal stimuli through emotion. Thus, emotion plays an important role in everyday life. In recent years, research on emotion has increased significantly in different fields such as psychology, neuroscience, and computer science, where the so-called emotion artificial intelligence (AI) is committed to developing systems to recognize, process, interpret and simulate human emotion, leading to various potential applications in human lives including E-teaching, home care, security, and so on.

Cognition refers to the mental action or process of acquiring knowledge and understanding through thought, experience, and the senses [4–7]. Through cognitive processing, we make decisions and produce appropriate responses. Research shows that cognition evolved hand-in-hand with emotion [2, 3]. In other words, emotion and cognition are two sides of the same system, and separating them leads to anomalous behaviors. However, despite the importance of cognition to human intelligence, research on cognitive AI is still very limited. To better understand human needs and advance the development of AI, it is crucial to build computational models with cognitive functions that understand human thoughts and behaviors, and to transfer this cognitive capability to machines for better interaction, communication, and cooperation in human-computer interactions or computer-assisted human-human interactions. In the future, modeling human cognition and reconstructing it together with emotion in real-world application systems will be the new objectives of AI [8].

By their nature, since both emotion AI and cognitive AI are inspired by and built to imitate human intelligence, they have much in common and require the combined efforts of multiple disciplines. In this sense, the focus of this paper is to discuss future cognitive AI, which makes computers capable of analyzing, reasoning, and making decisions like human users. We first introduce the relationship between emotion and cognition. Then, emotion AI and cognitive AI are introduced. The important aspects of cognitive AI, including engagement, regulation, decision making, and discovery, are then discussed. Finally, we share our views on the research focus for future cognitive AI.

2. Emotion and Cognition

2.1. Emotion-Cognition Interactions

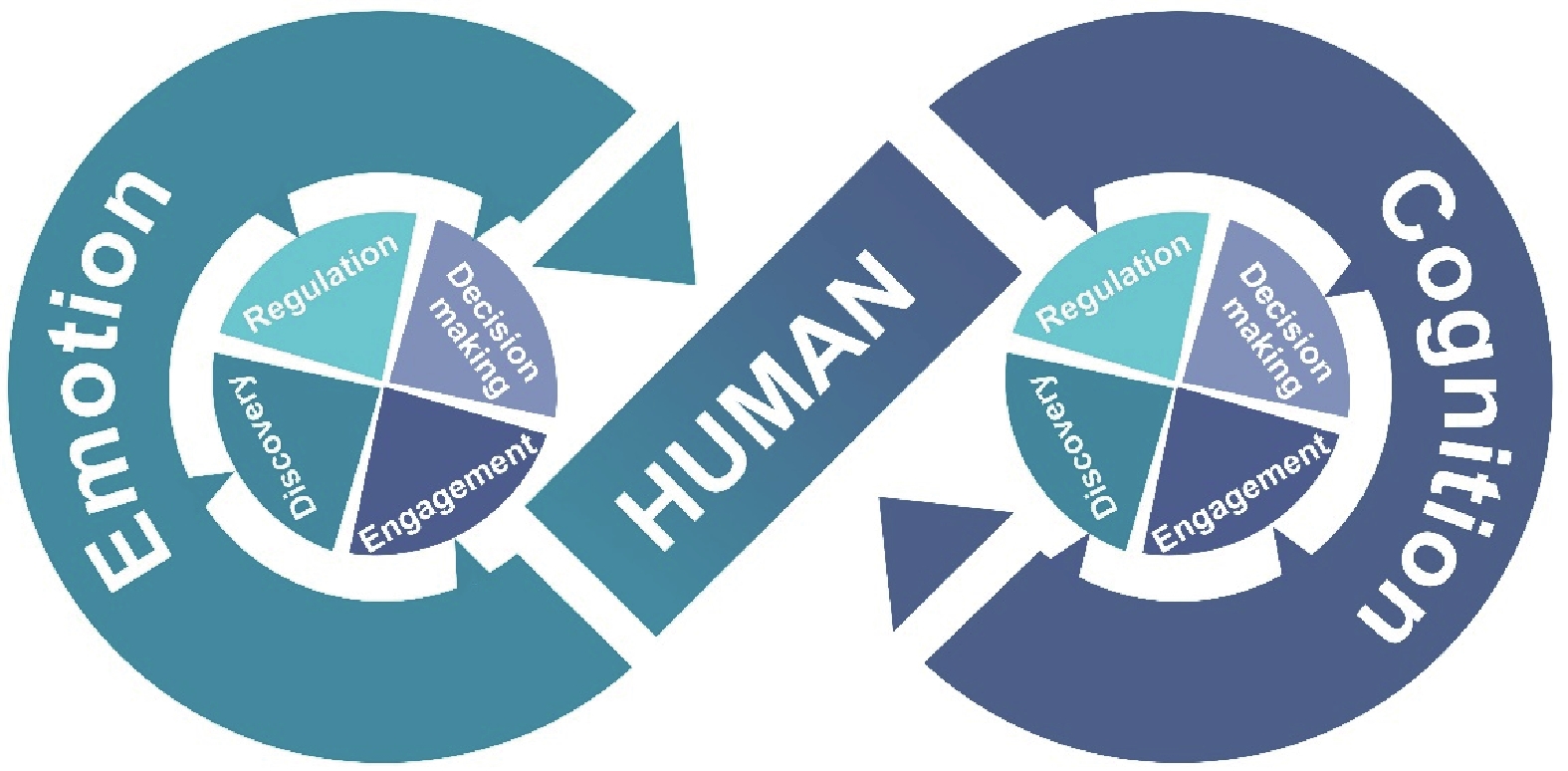

Cognition and emotion appear to be separate from each other, but they are actually two sides of the same coin, as shown in Figure 1. For a long time, dating back to Plato, emotion and cognition were considered separate and independent processes [9, 10]. In recent years, however, more and more studies have come to regard emotion and cognition as interrelated and integrated [11]. Numerous psychological studies have found that the processing of salient emotional stimuli and the experience of affective states can affect behavioral and cognitive processing, while cognition can in turn influence and regulate emotion [12, 13]. From a neurobiological perspective, earlier studies tended to suggest that emotion is handled by subcortical structures such as the primitive limbic system, while cognitive processes are mostly handled by cortical areas such as the prefrontal cortex. Substantial studies in recent years, however, have shown that emotional and cognitive processes are interrelated and share a large number of overlapping brain regions [14, 15]; for reviews, see [12, 16]. Therefore, studying the interaction between emotion and cognition can help us create more trustworthy and human-like AI and enhance human-computer interactions.

Figure 1. Emotion and cognition.

2.2. Emotion AI

Emotion plays an essential role in human communication and cognition. With emotions, humans can express their various feelings or react accordingly to internal and external stimuli. While not all AI or intelligent software systems need to be emotional or to have every human-like emotion, such as depression and anxiety, using AI to further understand emotions and develop corresponding systems would certainly make AI more user-friendly and more convenient for our daily lives [17].

For this purpose, emotion AI has been developed and is dedicated to uncovering human emotions and enabling computers to have human-like capabilities to understand, interpret, and even express emotions [18]. Emotion AI can be used in a wide range of areas where emotions play an important role [17]. For instance, in mental health care, AI may help psychologists to detect symptoms or disorders that are hidden from, or go unnoticed by, patients. In education, an AI teacher that can understand students’ emotional states and respond appropriately would enhance the learning process, and could thus be used in remote or hybrid learning scenarios. Furthermore, emotion AI is coming into use in many other fields such as entertainment, communication, and intelligent agents [17]. To date, the novel methodologies developed in affective computing and emotion AI have significantly contributed to our lives in many areas such as emotional well-being, E-teaching, home care, and security.

Currently, there are three main streams in artificial emotional intelligence, namely emotion recognition, emotion synthesis, and emotion augmentation [19]. Emotion recognition refers to applying computer systems to recognize human emotions, and is one of the most traditional approaches in emotion AI research [20]. Emotion recognition has been widely studied across different modalities such as facial expressions [21–23], body gestures [24, 25], acoustic or written linguistic content [26, 27], physiological signals [28, 29], and the fusion of multiple modalities [30–32]. While most previous research has focused on facial expression recognition, given the multi-modal nature of emotions, more and more researchers are turning to other modalities as well.
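To make the idea of fusing multiple modalities concrete, the following is a minimal sketch of one common strategy, late (decision-level) fusion, in which each modality produces its own probability vector and the vectors are averaged with weights. The label set, the modality names, and the functions `late_fusion` and `predict` are illustrative assumptions and are not taken from the cited works.

```python
from typing import Dict, List

# Hypothetical label set, chosen only for this illustration.
EMOTIONS: List[str] = ["happy", "sad", "angry", "neutral"]


def late_fusion(per_modality: Dict[str, List[float]],
                weights: Dict[str, float]) -> List[float]:
    """Weighted average of per-modality probability vectors."""
    fused = [0.0] * len(EMOTIONS)
    total_weight = 0.0
    for modality, probs in per_modality.items():
        if len(probs) != len(EMOTIONS):
            raise ValueError(f"{modality}: expected {len(EMOTIONS)} scores")
        w = weights.get(modality, 0.0)  # modalities without a weight are ignored
        total_weight += w
        for i, p in enumerate(probs):
            fused[i] += w * p
    if total_weight == 0.0:
        raise ValueError("at least one modality needs a positive weight")
    return [score / total_weight for score in fused]


def predict(per_modality: Dict[str, List[float]],
            weights: Dict[str, float]) -> str:
    """Return the label with the highest fused score."""
    fused = late_fusion(per_modality, weights)
    return EMOTIONS[max(range(len(fused)), key=fused.__getitem__)]


if __name__ == "__main__":
    scores = {
        "face": [0.5, 0.1, 0.1, 0.3],        # the face alone suggests "happy"
        "voice": [0.2, 0.5, 0.1, 0.2],
        "physiology": [0.1, 0.6, 0.1, 0.2],
    }
    equal = {"face": 1.0, "voice": 1.0, "physiology": 1.0}
    print(predict(scores, equal))
```

In this toy input the facial channel alone would favor "happy", while the fused decision follows the voice and physiological channels, which is the kind of disagreement that multi-modal systems are meant to resolve. Real systems often learn the fusion weights or fuse at the feature level instead.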

With these multi-modal cues, AI can better recognize genuine and spontaneous emotions and build more reliable systems accordingly. Besides recognizing human emotions, AI often needs to analyze and process human behaviors and interact with humans, so it is important to equip AI with emotion synthesis capability [33] to improve the human-computer interactive experience. The main developments in emotion synthesis are in speech, facial expression, and body behavior synthesis, and date back as far as three decades [19]. Exploring emotion synthesis can undoubtedly enhance AI’s affective and social realism, thus improving the reliability of AI and making its interaction with users more natural [34]. However, it is important to note that the emotion synthesis discussed here is far from an artificially emotional machine, as emotion synthesis is more about external emotional expressions, i.e., equipping AI with some human-like emotional reactions. There is still a long way to go before AI can generate inner emotions, which involves many ethical issues that cannot be ignored.

In addition, emotion augmentation denotes embedding the principle of emotion in AI and using it in planning, reasoning, or more general goal achievement [19, 35]. In other words, emotion augmentation requires applying emotion concepts to achieve broader goals in AI. For instance, by introducing two emotional parameters, namely anxiety and confidence, the emotional backpropagation (BP) learning algorithm can outperform the conventional BP-based neural network in the facial recognition task [36]. Apart from that, emotion augmentation can also be used in a wider range of scenarios, such as designing models, computational reinforcement learning, or other cognitive-related tasks [35]. Although this goal is still far away, the booming development of studies in sentiment analysis and affective computing (in computer science and other interdisciplinary fields) makes translating emotions into AI less far-fetched than one might think.

2.3. Cognitive AI

Human thinking is beyond imagination. What humans think about every day includes how to act in a dynamic and evolving world, how to juggle multiple goals and preferences, how to face opportunities and threats, and so on. Is it possible for a computer to develop the ability to think and reason without human intervention? An overarching goal for future AI is to simulate human thought processes in a computerized model. The result is cognitive AI.

Cognitive AI is derived from AI and cognitive science [37]. It develops computer systems that simulate human brain functions [38]. The aim of cognitive AI is to mimic human behaviors and solve complex problems. It encompasses the main brain behaviors of human intelligence, including perception, attention, thought, memory, knowledge formation, judgment and evaluation, problem-solving and decision making, etc. [39]. Moreover, cognitive processes utilize existing knowledge to discover new knowledge.

By its nature, cognitive AI needs to employ methods from multiple disciplines such as cognitive science, psychology, linguistics, physics, information theory, and mathematics [40, 41]. Based on knowledge from these disciplines, cognitive AI uses computer theory and techniques to simulate human cognitive tasks in order to make computers think like the human brain [41]. Cognitive AI is fundamentally different from traditional AI. Traditional AI, or traditional computing techniques, mainly processes the data it is fed according to rules pre-programmed by humans [42]. In contrast, cognitive AI should be able to continuously adapt itself to the context and environment so as to evolve and grow over time. For example, IBM Watson, a typical representative of cognitive AI, defeated human champions in the quiz show Jeopardy! in 2011 [43]. Unlike its forerunner Deep Blue (known as the first computer to defeat a reigning world chess champion), which relied on exhaustive search and performed merely quantitative analysis, Watson relies on offline stored information and uses natural language processing combined with appropriate contextual information to perform adaptive reasoning and learning to produce answers [41, 43].

In this sense, the benefits of cognitive AI outweigh those of traditional AI: traditional AI executes optimized algorithms to solve problems, while cognitive AI goes a big step further to replicate human wisdom and intelligence through multifaceted analysis, including understanding and modeling human engagement, regulation (self-regulation and shared regulation in interactions), decision making, discovery, etc. Note that cognitive AI requires complex data learning, knowledge mining, multi-modal explainability, and hybrid AI to learn and analyze patterns and to ensure that the reasoning results are reliable and consistent [44].

3. Important Aspects in Cognitive AI

In this section, we specifically discuss the important aspects of developing a successful cognitive AI system, including engagement, regulation, decision making, and discovery.

3.1. Engagement

Engagement describes the effortful commitment to goals [45]. Cognition, emotion, and motivation are incorporated into the engagement. Multiple studies show that positive emotion and motivation can improve the engagement of humans [46]. These findings have been successfully utilized in various fields, such as education, business dealing and human-computer interaction (HCI) to improve the engagement of users [47–49].

In recent years, besides emotion and motivation, researchers have started to study the contribution of cognitive AI to engagement. IBM developed Watson, taking advantage of cognitive AI for customer engagement, to better identify the wants and needs of customers [50]. In healthcare, cognitive computing systems collect individual health data from a variety of sources to enhance patient engagement and help professionals treat patients in a customized manner [51]. However, the technology is still at an early stage. Finding what really matters in engaging humans requires further study.

3.2. Regulation

In social cognitive theory, human behavior is motivated and regulated through constant self-influence. The self-regulative mechanism has a strong impact on affect, thought, motivation, and human action [52]. Regulation involves the ability to make adaptive changes in terms of cognition, motivation, and emotions in challenging situations [53]. Emotional changes can be the cause of regulation, and they can also be its result [54].

In Bandura’s theory [55], the regulation process comprises self-observation, judgment, and self-response. Self-observation is how humans look at themselves and their behaviors. Judgment refers to comparing what they observe against a standard. Self-response is performing different behaviors depending on whether the standard is met. Through these steps, humans are able to control their behavior [52]. However, it has so far been almost impossible to find evidence of "invisible mental" shared regulation due to methodological limitations [56]. Moreover, it is not clear how humans regulate their internal states in response to internal and external stressors [52]. In the future, self- and shared-regulative mechanisms should be further investigated and integrated into AI systems so that AI can learn by itself as humans do.
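The three steps described above (self-observation, judgment, self-response) can be read as a simple feedback loop. The sketch below is only a toy illustration under strong simplifying assumptions: behavior is reduced to a single number, the standard is a fixed target, and the names `Regulator`, `tolerance`, and `gain` are hypothetical. It is not a model proposed in [52] or [55].

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Regulator:
    standard: float          # hypothetical target level for the behavior
    tolerance: float = 0.05  # how close counts as "meeting the standard"
    gain: float = 0.5        # how strongly the agent corrects itself

    def observe(self, behavior: float) -> float:
        """Self-observation: register the current behavior."""
        return behavior

    def judge(self, observed: float) -> float:
        """Judgment: signed gap between the standard and the observation."""
        return self.standard - observed

    def respond(self, behavior: float, gap: float) -> float:
        """Self-response: keep the behavior if the standard is met, else adjust."""
        if abs(gap) <= self.tolerance:
            return behavior
        return behavior + self.gain * gap


def regulate(regulator: Regulator, behavior: float, max_steps: int = 20) -> List[float]:
    """Run the observe-judge-respond loop and return the behavior history."""
    history = [behavior]
    for _ in range(max_steps):
        gap = regulator.judge(regulator.observe(behavior))
        adjusted = regulator.respond(behavior, gap)
        if adjusted == behavior:  # standard met, no further change
            break
        behavior = adjusted
        history.append(behavior)
    return history


if __name__ == "__main__":
    print(regulate(Regulator(standard=1.0), behavior=0.0))
```

The loop stops once the observed behavior falls within the tolerance of the standard. Shared regulation between several agents, which the text identifies as an open problem, is deliberately left out of this sketch.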

3.3. Decision Making

Decision making is regarded as the cognitive process referring to problem-solving activities yielding a solution deemed to be optimal among many alternatives [57]. The decision making process can be based on explicit or tacit knowledge and beliefs. It can be rational or irrational.

Decision making happens constantly in humans’ daily lives. A wise decision may make life and the world better. However, human decisions may be influenced by multiple factors, especially emotion. In particular, choices can be influenced by a person’s mood at the time of decision making [58, 59]. For instance, happy people are more likely to be optimistic about the current situation and choose to maintain the current state, whereas sad people are more inclined to be pessimistic about the current situation and choose to change it. Fearful people tend to make pessimistic judgments about future events, while angry people tend to make optimistic judgments about future events. It is important to study how to model the connections between emotion and decision making and to understand the underlying process of decision making in the human brain.

3.4. Discovery

As a high-level and advanced aspect of cognitive AI, discovery refers to finding insights and connections in vast amounts of information and developing skills to extract new knowledge [60]. With increasing volumes of data, it becomes possible to train models with AI technologies to efficiently discover useful information from massive data. At the same time, massive and complex data increase the difficulty of manual processing by humans. Therefore, AI systems are needed to help exploit information more effectively than humans could on their own.

In recent years, some systems with discovery capabilities have already emerged. The cognitive information management shell at Louisiana State University is a cognitive solution that has been applied to preventing future oil spills [61]. More specifically, it is a complex event processing system that can detect problems in mission-critical settings and generate intelligent decisions to modulate the system environment. The system contains distributed intelligent agents that collect multiple data streams to build interactive sensing, inspection, and visualization systems, and it is able to achieve real-time monitoring and analysis. By archiving past events and cross-referencing them with current events, the system can discover deeply hidden patterns and then make decisions based on them. Notably, cognitive information management not only sends alerts but also dynamically reconfigures itself to isolate critical events and repair failures. Currently, the study of systems with discovery capabilities is still in its early stages, but the value propositions for the future are very compelling. In the future, we should consider more carefully how to design cognitive systems with discovery ability effectively and efficiently so as to solve different tasks in complex real-life situations.
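As a rough illustration of cross-referencing current events with archived ones, the sketch below keeps a sliding window over a live event stream and raises an alert when the window matches a sequence that preceded a past failure. The class `EventMonitor` and the event names are hypothetical; the system described in [61] is far richer (distributed agents, visualization, dynamic reconfiguration) and is not reproduced here.

```python
from collections import deque
from typing import Deque, Iterable, Set, Tuple

Pattern = Tuple[str, ...]


class EventMonitor:
    """Toy complex-event monitor based on archived precursor patterns."""

    def __init__(self, window: int = 3) -> None:
        self.window = window
        self.recent: Deque[str] = deque(maxlen=window)  # most recent live events
        self.precursors: Set[Pattern] = set()           # patterns seen before past failures

    def archive_incident(self, events_before_failure: Iterable[str]) -> None:
        """Store the last `window` events observed before a known failure."""
        tail = tuple(events_before_failure)[-self.window:]
        self.precursors.add(tail)

    def observe(self, event: str) -> bool:
        """Record a live event; return True if the window matches an archived pattern."""
        self.recent.append(event)
        return tuple(self.recent) in self.precursors


if __name__ == "__main__":
    monitor = EventMonitor(window=3)
    # Hypothetical event names, used only for illustration.
    monitor.archive_incident(["pressure_rise", "valve_stuck", "temp_spike"])
    stream = ["ok", "pressure_rise", "valve_stuck", "temp_spike", "ok"]
    print([monitor.observe(event) for event in stream])
```

Exact sequence matching is the simplest possible choice. A practical system would need tolerant matching, timestamps, and a way to act on alerts rather than only report them.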

In general, engagement, regulation, decision making, and discovery are considered to be complementary in cognitive AI systems, as shown in Figure 2. Engagement refers to the way in which humans and systems interact with each other. By enhancing engagement, more effort is contributed to achieving the final goal. In this process, adaptations related to regulation are ongoing, which further stimulates engagement [62]. Furthermore, engagement and regulation can influence the ability to explore underlying complex relationships and to solve problems, which are related to discovery and decision making, respectively [63]. In turn, just as in human beings, discovery and decision making can also impact engagement and regulation. To develop a successful cognitive AI system, all four of the above aspects should be considered and well designed.

Figure 2. Four aspects of cognitive AI are complementary to each other.

4. The Future of Cognitive AI

Towards cognitive AI, data and knowledge mining, multi-modal AI explainability, and hybrid AI should be explored, and the potential new ethical issues should also be kept in mind.

4.1. Data and Knowledge Mining

Fueled by advances in AI and the rapid growth of digital data, cognitive processes could be increasingly delegated to automated processes based on data analysis and knowledge mining [64], which aims to uncover hidden patterns and knowledge from vast amounts of information through AI technology [65]. New AI systems should support analytical approaches to engagement, regulation, decision making, and discovery, which involve big data of greater variety, higher volume, and higher velocity as technology develops [66]. Most current machine learning techniques for big data operate in vector space and work only for specific scenarios [67–69]. It is difficult to store, analyze, and visualize big and complex cognitive data for further processing with traditional statistical models. A promising future research focus is to learn from dynamic data of complicated cognitive processes in both Euclidean and non-Euclidean space, with uncertainties, for generic scenarios and scenarios with equivocality [70]. Another research direction is life-long learning and self-supervised learning, which discover new knowledge by digging into the relationships among data with complex structures and uncertainties. For example, in the field of cybersecurity, where dynamic threats and cyber-attacks appear all the time [71], fast, self-reliant cognitive computing systems are needed that can make wiser security decisions based on self-learned knowledge and complex surrounding factors.

4.2. Multi-Modal AI Explainability

Cognitive systems are dedicated to developing advanced human-like AI. However, as AI becomes more intelligent and impacts our lives in many ways, an unavoidable question is how we can trace back and understand the results of AI algorithms.

Explainable artificial intelligence (XAI) refers to AI that generates details and reasoning processes to help users understand its functions [72]. In terms of cognitive AI, it is often necessary to collect information from different modalities. For example, in building emotion and cognitive AI engines, behavioral information such as facial expressions and body gestures, language and voice information, as well as physiological signals are all important data sources for understanding human emotion and cognition functions, and are collected simultaneously across modalities. However, once we feed all the data into the computer, the whole calculation process seems to be a “black box”, and even the researchers or engineers who develop the algorithms cannot know what happens inside the “box” [72, 73]. Therefore, developing cognitive XAI to help us interpret the results, reveal the reasoning process, and find causality among multi-modal data variables would be a fruitful area for further work. The study of multi-modal AI explainability could have various applications, especially in health care. On the one hand, cognitive XAI could help doctors better analyze diseases and reduce the rate of misdiagnosis [42, 74]. On the other hand, patients can better understand their health status, which leads to increased trust in treatment options.
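One simple way to look inside such a "black box" is modality ablation: replace one modality at a time with a neutral baseline and measure how much the output changes. The sketch below illustrates this under stated assumptions. The function `ablation_attribution`, the stand-in model `toy_stress_model`, and its weights are hypothetical, and ablation is only one of many explainability techniques; it indicates sensitivity of the output to each modality, not causality.

```python
from typing import Callable, Dict

# One scalar score per modality, purely for illustration.
Features = Dict[str, float]


def ablation_attribution(model: Callable[[Features], float],
                         inputs: Features,
                         baseline: Features) -> Dict[str, float]:
    """Output change when each modality is replaced by its baseline value."""
    full_output = model(inputs)
    attributions: Dict[str, float] = {}
    for modality in inputs:
        ablated = dict(inputs)                  # copy so the caller's data is untouched
        ablated[modality] = baseline[modality]  # "switch off" one modality
        attributions[modality] = full_output - model(ablated)
    return attributions


def toy_stress_model(x: Features) -> float:
    """Hypothetical stand-in for a trained multi-modal model."""
    return 0.6 * x["face"] + 0.3 * x["voice"] + 0.1 * x["heart_rate"]


if __name__ == "__main__":
    sample = {"face": 1.0, "voice": 1.0, "heart_rate": 1.0}
    neutral = {"face": 0.0, "voice": 0.0, "heart_rate": 0.0}
    for name, value in ablation_attribution(toy_stress_model, sample, neutral).items():
        print(f"{name}: {value:.2f}")
```

For a linear stand-in model the attributions simply recover the weights. For a real non-linear model, interactions between modalities mean that single-modality ablation can under- or over-state a modality's role.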

4.3. Hybrid AI

Hybrid AI, which represents joint intelligence from human beings and algorithms, is a future direction. To date, hybrid AI has gained increasing attention and has started to be applied in realistic applications, such as management [75]. However, most of these are simple combinations of human and AI decisions. Better ways to take advantage of both humans and algorithms should be explored. Moreover, in daily life, efficient interactions and collaborations along with optimal decisions require contributions from multiple people or systems with various strengths. It is therefore valuable to study how to combine the decisions of a group of people or systems. In general, when addressing complexity, AI can extend humans’ cognition, while humans offer an intuitive, generic, and creative approach to dealing with uncertainties and equivocality. Therefore, hybrid intelligence can strengthen both human intelligence and AI, and let both co-evolve by integrating cognitive AI into the development. Hybrid cognitive AI can benefit high-risk situations, such as medical diagnostics, algorithmic trading, and autonomous driving [76].
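As a minimal sketch of combining the decisions of a group of people and systems, the example below weights each opinion by its stated confidence and by a prior reliability score, and defers to human review when no option wins a clear share. The names `Opinion`, `combine`, `decide`, the source labels, and the threshold are assumptions made for illustration and do not come from [75] or [76].

```python
from typing import Dict, List, NamedTuple


class Opinion(NamedTuple):
    source: str         # e.g. "radiologist" or "model_a" (hypothetical names)
    choice: str         # the option this source recommends
    confidence: float   # stated or calibrated confidence in [0, 1]
    reliability: float  # prior trust in this source in [0, 1]


def combine(opinions: List[Opinion]) -> Dict[str, float]:
    """Share of the reliability-weighted confidence received by each option."""
    scores: Dict[str, float] = {}
    for op in opinions:
        scores[op.choice] = scores.get(op.choice, 0.0) + op.confidence * op.reliability
    total = sum(scores.values())
    if total == 0.0:
        raise ValueError("no opinion carries any weight")
    return {choice: score / total for choice, score in scores.items()}


def decide(opinions: List[Opinion], defer_below: float = 0.6) -> str:
    """Pick the leading option, or defer when its share is below the threshold."""
    shares = combine(opinions)
    best = max(shares, key=shares.get)
    return best if shares[best] >= defer_below else "defer_to_human_review"


if __name__ == "__main__":
    panel = [
        Opinion("radiologist", "benign", 0.7, 0.9),
        Opinion("model_a", "malignant", 0.9, 0.8),
        Opinion("model_b", "malignant", 0.6, 0.5),
    ]
    print(combine(panel))
    print(decide(panel))
```

The deferral threshold reflects the point made above that humans remain better placed to handle uncertainty and equivocality: when the group is split, the sketch hands the case back to a person instead of forcing an automated answer.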

4.4. Ethical Concerns

If AI algorithms/systems have more emotional and cognitive intelligence, they would be more human-like and play a more important role in various applications, but more ethical concerns might be introduced, which cannot be overlooked in constructing future cognitive computing systems.

AI is usually driven by big data, and nowadays many researchers emphasize the use of multi-modal data to improve the accuracy of algorithms. However, a large amount of data, especially from the same individual, also introduces privacy and potential misuse issues. There is also growing concern about the risks of false interpretation of emotions and false cognitive actions due to inaccurate and unreliable AI algorithms. AI may reveal emotional or cognitive states that people do not want to disclose, and people may be treated unfairly due to bias in the AI’s decisions. Therefore, while improving the performance of AI, it is important to consider the ethical issues and comply with the corresponding principles, e.g., transparency, justice, fairness and equity, non-maleficence, responsibility, and privacy. For a detailed guideline, please see [77].

5. Conclusion

Emotion AI and cognitive AI simulate human intelligence together. Emotion AI is dedicated to discovering human emotions and enabling computers to have human-like capabilities to understand, interpret, and synthesize emotions. Cognitive AI aims to make computers analyze, reason, and make decisions like a human. In this paper, we review the development and relationship of emotion AI and cognitive AI. Cognitive computing is recognized as the next era of computing. Four important aspects of cognitive AI are discussed including engagement, regulation, decision making, and discovery, which are complementary in cognitive AI. To develop a successful cognitive computing system, all of the above four aspects should be considered. Finally, this paper explores the future of cognitive AI. Possible research directions on future cognitive AI include data and knowledge mining, multi-modal AI explainability, hybrid AI, and potential ethical issues.

Author Contributions: Guoying Zhao, Yante Li and Qianru Xu: conceptualization; Yante Li and Qianru Xu: investigation; Yante Li and Qianru Xu: writing — original draft preparation; Guoying Zhao: writing — review and editing; Guoying Zhao: supervision; Guoying Zhao: project administration; Guoying Zhao: funding acquisition. All authors have read and agreed to the published version of the manuscript.

Funding: This research is funded by the Academy of Finland for Academy Professor project Emotion AI, grant Nos 336116 and 345122, and Ministry of Education and Culture of Finland for AI forum project.

Conflicts of Interest: The authors declare no conflict of interest.

Acknowledgments: The authors thank Guoying Zhao for the figure design.

References

- Luckin, R. Machine Learning and Human Intelligence: The Future of Education for the 21st Century; UCL IOE Press: London, 27 July 2018. DOI: https://doi.org/10.1177/14782103221117655

- Phelps, E.A, Emotion and cognition: Insights from studies of the human amygdala. Annu. Rev. Psychol., 2006, 57: 27−53. DOI: https://doi.org/10.1146/annurev.psych.56.091103.070234

- Isaacowitz, D.M.; Charles, S.T.; Carstensen, L.L. Emotion and cognition. In The Handbook of Aging and Cognition; Salthouse, T.A., Ed.; Lawrence Erlbaum Associates Inc.: Mahwah, 2000; pp. 593–631.

- Sommerville, J.A. Social Cognition. In Encyclopedia of Infant and Early Childhood Development; Elsevier, 2020; pp. 196–206. DOI: https://doi.org/10.1016/B978-0-12-809324-5.21640-4

- Jian, M.W.; Zhang, W.Y.; Yu, H.; et al, Saliency detection based on directional patches extraction and principal local color contrast. J. Vis. Commun. Image Represent., 2018, 57: 1−11. DOI: https://doi.org/10.1016/j.jvcir.2018.10.008

- Jian, M.W.; Qi, Q.; Dong, J.Y.; et al, Integrating QDWD with pattern distinctness and local contrast for underwater saliency detection. J. Vis. Commun. Image Represent., 2018, 53: 31−41. DOI: https://doi.org/10.1016/j.jvcir.2018.03.008

- Jian, M.W.; Lam, K.M.; Dong, J.Y.; et al, Visual-patch-attention-aware saliency detection. IEEE Trans. Cybern., 2015, 45: 1575−1586. DOI: https://doi.org/10.1109/TCYB.2014.2356200

- Schmid, U, Cognition and AI. Fachbereich 1 Künstliche Intelligenz der Gesellschaft für Informatik e.V., GI., 2008, 1: 5.

- Scherer, K.R. On the nature and function of emotion: A component process approach. In Approaches to Emotion; Scherer, K.R.; Ekman, P., Eds.; Psychology Press: New York, 1984; p. 26.

- Lazarus, R.S. The cognition-emotion debate: A bit of history. In Handbook of Cognition and Emotion; Dalgleish, T.; Power, M.J., Eds.; John Wiley & Sons: New York, 1999; pp. 3–19. DOI: https://doi.org/10.1002/0470013494.ch1

- Pessoa, L. The Cognitive-Emotional Brain: From Interactions to Integration; MIT Press: Cambridge, 2013. DOI: https://doi.org/10.7551/mitpress/9780262019569.001.0001

- Okon-Singer, H.; Hendler, T.; Pessoa, L.; et al, The neurobiology of emotion–cognition interactions: Fundamental questions and strategies for future research. Front. Hum. Neurosci., 2015, 9: 58. DOI: https://doi.org/10.3389/fnhum.2015.00058

- Dolcos, F.; Katsumi, Y.; Moore, M.; et al, Neural correlates of emotion-attention interactions: From perception, learning, and memory to social cognition, individual differences, and training interventions. Neurosci. Biobehav. Rev., 2020, 108: 559−601. DOI: https://doi.org/10.1016/j.neubiorev.2019.08.017

- Shackman, A.J.; Salomons, T.V.; Slagter, H.A.; et al, The integration of negative affect, pain and cognitive control in the cingulate cortex. Nat. Rev. Neurosci., 2011, 12: 154−167. DOI: https://doi.org/10.1038/nrn2994

- Young, M.P.; Scannell, J.W.; Burns, G.A.P.C.; et al, Analysis of connectivity: Neural systems in the cerebral cortex. Rev. Neurosci., 1994, 5: 227−250. DOI: https://doi.org/10.1515/REVNEURO.1994.5.3.227

- Pessoa, L, On the relationship between emotion and cognition. Nat. Rev. Neurosci., 2008, 9: 148−158. DOI: https://doi.org/10.1038/nrn2317

- Martínez-Miranda, J.; Aldea, A, Emotions in human and artificial intelligence. Comput. Hum. Behav., 2005, 21: 323−341. DOI: https://doi.org/10.1016/j.chb.2004.02.010

- Tao, J.H.; Tan, T.N. Affective computing: A review. In Proceedings of the 1st International Conference on Affective Computing and Intelligent Interaction, Beijing, China, October 22–24, 2005; Springer: Beijing, China, 2005; pp. 981–995. DOI: https://doi.org/10.1007/11573548_125

- Schuller, D.; Schuller, B.W, The age of artificial emotional intelligence. Computer, 2018, 51: 38−46. DOI: https://doi.org/10.1109/MC.2018.3620963

- Saxena, A.; Khanna, A.; Gupta, D, Emotion recognition and detection methods: A comprehensive survey. J. Artif. Intell. Syst., 2020, 2: 53−79. DOI: https://doi.org/10.33969/AIS.2020.21005

- Li, Y.T.; Wei, J.S.; Liu, Y.; et al, Deep learning for micro-expression recognition: A survey. IEEE Trans. Affect. Comput., 2022, 13: 2028−2046. DOI: https://doi.org/10.1109/TAFFC.2022.3205170

- Liu, Y.; Zhou, J.Z.; Li, X.; et al, Graph-based Facial Affect Analysis: A Review. IEEE Trans. Affect. Comput., 2022, 19: 1−20. DOI: https://doi.org/10.1109/TAFFC.2022.3215918

- Liu, Y.; Zhang, X.M.; Zhou, J.Z.; et al, SG-DSN: A Semantic Graph-based Dual-Stream Network for facial expression recognition. Neurocomputing, 2021, 462: 320−330. DOI: https://doi.org/10.1016/j.neucom.2021.07.017

- Chen, H.Y.; Liu, X.; Li, X.B.; et al. Analyze spontaneous gestures for emotional stress state recognition: A micro-gesture dataset and analysis with deep learning. In Proceedings of 2019 14th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2019), Lille, France, 14–18 May 2019; IEEE: Lille, France, 2019; pp. 1–8. DOI: https://doi.org/10.1109/FG.2019.8756513

- Liu, X.; Shi, H.L.; Chen, H.Y.; et al. iMiGUE: An identity-free video dataset for micro-gesture understanding and emotion analysis. In Proceedings of 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; IEEE: Nashville, USA, 2021; pp. 10631–10642. DOI: https://doi.org/10.1109/CVPR46437.2021.01049

- Koolagudi, S.G.; Rao, K.S, Emotion recognition from speech: A review. Int. J. Speech Technol., 2012, 15: 99−117. DOI: https://doi.org/10.1007/s10772-011-9125-1

- Zhou, Z.H.; Zhao, G.Y.; Hong, X.P.; et al, A review of recent advances in visual speech decoding. Image Vis. Comput., 2014, 32: 590−605. DOI: https://doi.org/10.1016/j.imavis.2014.06.004

- Yu, Z.T.; Li, X.B.; Zhao, G.Y, Facial-Video-Based Physiological Signal Measurement: Recent advances and affective applications. IEEE Signal Process. Mag., 2021, 38: 50−58. DOI: https://doi.org/10.1109/MSP.2021.3106285

- Shu, L.; Xie, J.Y.; Yang, M.Y.; et al, A review of emotion recognition using physiological signals. Sensors, 2018, 18: 2074. DOI: https://doi.org/10.3390/s18072074

- Li, X.B.; Cheng, S.Y.; Li, Y.T.; et al, 4DME: A spontaneous 4D micro-expression dataset with multimodalities. IEEE Trans. Affect. Comput., 2022, in press. DOI: https://doi.org/10.1109/TAFFC.2022.3182342

- Huang, X.H.; Kortelainen, J.; Zhao, G.Y.; et al, Multi-modal emotion analysis from facial expressions and electroencephalogram. Comput. Vis. Image Underst., 2016, 147: 114−124. DOI: https://doi.org/10.1016/j.cviu.2015.09.015

- Saleem, S.M.; Abdullah, A.; Ameen, S.Y.A.; et al, Multimodal emotion recognition using deep learning. J. Appl. Sci. Technol. Trends, 2021, 2: 52−58. DOI: https://doi.org/10.38094/jastt20291

- Lisetti, C.L. Emotion synthesis: Some research directions. In Proceedings of the Working Notes of the AAAI Fall Symposium Series on Emotional and Intelligent: The Tangled Knot of Cognition, Orlando, FL, USA, October 22–24, 1998; AAAI Press: Menlo Park, USA, 1998; pp. 109–115.

- Hudlicka, E, Guidelines for designing computational models of emotions. Int. J. Synth. Emotions (IJSE), 2011, 2: 26−79. DOI: https://doi.org/10.4018/jse.2011010103

- Strömfelt, H.; Zhang, Y.; Schuller, B.W. Emotion-augmented machine learning: Overview of an emerging domain. In Proceedings of 2017 Seventh International Conference on Affective Computing and Intelligent Interaction (ACII), San Antonio, TX, USA, 23–26 October 2017; IEEE: San Antonio, USA, 2017; pp. 305–312. DOI: https://doi.org/10.1109/ACII.2017.8273617

- Khashman, A, A modified backpropagation learning algorithm with added emotional coefficients. IEEE Trans. Neural Netw., 2008, 19: 1896−1909. DOI: https://doi.org/10.1109/TNN.2008.2002913

- Hwang, K.; Chen, M. Big-Data Analytics for Cloud, IoT and Cognitive Computing; John Wiley & Sons: Hoboken, NJ, USA, 2017.

- Wang, Y.X, A cognitive informatics reference model of autonomous agent systems (AAS). Int. J. Cognit. Inf. Nat. Intell., 2009, 3: 1−16. DOI: https://doi.org/10.4018/jcini.2009010101

- Li, J.H.; Mei, C.L.; Xu, W.H.; et al, Concept learning via granular computing: A cognitive viewpoint. Inf. Sci., 2015, 298: 447−467. DOI: https://doi.org/10.1016/j.ins.2014.12.010

- Chen, M.; Herrera, F.; Hwang, K, Cognitive computing: Architecture, technologies and intelligent applications. IEEE Access, 2018, 6: 19774−19783. DOI: https://doi.org/10.1109/ACCESS.2018.2791469

- Gudivada, V.N, Cognitive computing: Concepts, architectures, systems, and applications. Handb. Stat., 2016, 35: 3−38. DOI: https://doi.org/10.1016/bs.host.2016.07.004

- Sreedevi, A.G.; Harshitha, T.N.; Sugumaran, V.; et al, Application of cognitive computing in healthcare, cybersecurity, big data and IoT: A literature review. Inf. Process. Manage., 2022, 59: 102888. DOI: https://doi.org/10.1016/j.ipm.2022.102888

- Ferrucci, D.A, Introduction to “This is Watson”. IBM J. Res. Dev., 2012, 56: 1.1−1.5. DOI: https://doi.org/10.1147/JRD.2012.2194011

- Hurwitz, J.S.; Kaufman, M.; Bowles, A. Cognitive Computing and Big Data Analytics; John Wiley & Sons: Indianapolis, IN, USA, 2015.

- Fairclough, S.H.; Venables, L, Prediction of subjective states from psychophysiology: A multivariate approach. Biol. Psychol., 2006, 71: 100−110. DOI: https://doi.org/10.1016/j.biopsycho.2005.03.007

- Teixeira, T.; Wedel, M.; Pieters, R, Emotion-induced engagement in internet video advertisements. J. Mark. Res., 2012, 49: 144−159. DOI: https://doi.org/10.1509/jmr.10.0207

- Skinner, E.; Pitzer, J.; Brule, H. The role of emotion in engagement, coping, and the development of motivational resilience. In International Handbook of Emotions in Education; Pekrun, R.; Linnenbrink-Garcia, L., Eds.; Routledge: New York, 2014; pp. 331–347.

- Mathur, A.; Lane, N.D.; Kawsar, F. Engagement-aware computing: Modelling user engagement from mobile contexts. In Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Heidelberg, Germany, 12–16 September 2016; ACM: Heidelberg, Germany, 2016; pp. 622–633. DOI: https://doi.org/10.1145/2971648.2971760

- Renninger, K.A.; Hidi, S.E. The Power of Interest for Motivation and Engagement; Routledge: New York, 2015. DOI: https://doi.org/10.4324/9781315771045

- Klie, L, IBM’s Watson brings cognitive computing to customer engagement. Speech Technol. Mag., 2014, 19: 38−42.

- Behera, R.K.; Bala, P.K.; Dhir, A, The emerging role of cognitive computing in healthcare: A systematic literature review. Int. J. Med. Inf., 2019, 129: 154−166. DOI: https://doi.org/10.1016/j.ijmedinf.2019.04.024

- Bandura, A, Social cognitive theory of self-regulation. Organ. Behav. Hum. Decis. Process., 1991, 50: 248−287. DOI: https://doi.org/10.1016/0749-5978(91)90022-L

- Schunk, D.H.; Greene, J.A. Historical, contemporary, and future perspectives on self-regulated learning and performance. In Handbook of Self-Regulation of Learning and Performance; Schunk, D.H.; Greene, J.A., Eds.; Routledge: New York, 2018; pp. 1–15. DOI: https://doi.org/10.4324/9781315697048-1

- McRae, K.; Gross, J.J, Emotion regulation. Emotion, 2020, 20: 1−9. DOI: https://doi.org/10.1037/emo0000703

- Bandura, A. Social Foundations of Thought and Action; Prentice Hall: New York, 2002. Available online: https://psycnet.apa.org/record/1985-98423-000 (accessed on 5 November 2022).

- Azevedo, R.; Gašević, D, Analyzing multimodal multichannel data about self-regulated learning with advanced learning technologies: Issues and challenges. Comput. Hum. Behav., 2019, 96: 207−210. DOI: https://doi.org/10.1016/j.chb.2019.03.025

- Wilson, R.A.; Keil, F.C. The MIT Encyclopedia of the Cognitive Sciences; A Bradford Book, West Yorkshire, UK, 2001.

- Forgas, J.P, Mood effects on decision making strategies. Aust. J. Psychol., 1989, 41: 197−214. DOI: https://doi.org/10.1080/00049538908260083

- Loewenstein, G.; Lerner, J.S. The role of affect in decision making. In Handbook of Affective Science; Davidson, R.; Goldsmith, H.; Scherer, K., Eds.; Oxford University Press: Oxford, 2003; pp. 619–642. DOI: https://doi.org/10.1093/oso/9780195126013.003.0031

- Gliozzo, A.; Ackerson, C.; Bhattacharya, R.; et al. Building Cognitive Applications with IBM Watson Services: Volume 1 Getting Started; IBM Redbooks, IBM Garage, United States, 2017. Available online: https://www.redbooks.ibm.com/abstracts/sg248387.html (accessed on 6 November 2022).

- Iyengar, S.S.; Mukhopadhyay, S.; Steinmuller, C.; et al, Preventing future oil spills with software-based event detection. Computer, 2010, 43: 95−97. DOI: https://doi.org/10.1109/MC.2010.235

- Commissiong, M.A. Student Engagement, Self-Regulation, Satisfaction, and Success in Online Learning Environments. Ph.D. Thesis, Walden University, Minneapolis, USA, 2020.

- Starkey, K.; Hatchuel, A.; Tempest, S, Management research and the new logics of discovery and engagement. J. Manage. Stud., 2009, 46: 547−558. DOI: https://doi.org/10.1111/j.1467-6486.2009.00833.x

- Araujo, T.; Helberger, N.; Kruikemeier, S.; et al, In AI we trust? Perceptions about automated decision-making by artificial intelligence. AI Soc., 2020, 35: 611−623. DOI: https://doi.org/10.1007/s00146-019-00931-w

- Chirapurath, J. Knowledge Mining: The Next Wave of Artificial Intelligence-Led Transformation; Harvard Business Review, Harvard Business Publishing, Watertown, Massachusetts, 21 November 2019. Available online: https://hbr.org/sponsored/2019/11/knowledge-mining-the-next-wave-of-artificial-intelligence-led-transformation (accessed on 11 November 2022).

- Sagiroglu, S.; Sinanc, D. Big data: A review. In Proceedings of 2013 International Conference on Collaboration Technologies and Systems (CTS), San Diego, CA, USA, 20–24 May 2013; IEEE: San Diego, USA, 2013; pp. 42–47. DOI: https://doi.org/10.1109/CTS.2013.6567202

- Sarker, I.H, Machine learning: Algorithms, real-world applications and research directions. SN Comput. Sci., 2021, 2: 160. DOI: https://doi.org/10.1007/s42979-021-00592-x

- Peng, W.; Varanka, T.; Mostafa, A.; et al, Hyperbolic deep neural networks: A survey. IEEE Trans. Pattern Anal. Mach. Intell., 2022, 44: 10023−10044. DOI: https://doi.org/10.1109/TPAMI.2021.3136921

- Li, Z.M.; Tian, W.W.; Li, Y.T.; et al. A more effective method for image representation: Topic model based on latent Dirichlet allocation. In Proceedings of 2015 14th International Conference on Computer-Aided Design and Computer Graphics (CAD/Graphics), Xi'an, China, 26–28 August 2015; IEEE: Xi'an, China, 2015; pp. 143–148. DOI: https://doi.org/10.1109/CADGRAPHICS.2015.19

- Jarrahi, M.H, Artificial intelligence and the future of work: Human-AI symbiosis in organizational decision making. Bus. Horiz., 2018, 61: 577−586. DOI: https://doi.org/10.1016/j.bushor.2018.03.007

- Wyant, D.K.; Bingi, P.; Knight, J.R.; et al. DeTER framework: A novel paradigm for addressing cybersecurity concerns in mobile healthcare. In Research Anthology on Securing Medical Systems and Records; Information Resources Management Association, Ed.; IGI Global, Chocolate Ave. Hershey, PA 17033, USA, 2022; pp. 381–407. DOI: https://doi.org/10.4018/978-1-6684-6311-6.ch019

- Arrieta, A.B.; Díaz-Rodríguez, N.; Del Ser, J.; et al, Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Inf. Fusion, 2020, 58: 82−115. DOI: https://doi.org/10.1016/j.inffus.2019.12.012

- Holzinger, A, Explainable AI and multi-modal causability in medicine. i-com, 2021, 19: 171−179. DOI: https://doi.org/10.1515/icom-2020-0024

- Ravindran, N.J.; Gopalakrishnan, P. Predictive analysis for healthcare sector using big data technology. In Proceedings of 2018 Second International Conference on Green Computing and Internet of Things (ICGCIoT), Bangalore, India, 16–18 August 2018; IEEE: Bangalore, India, 2018; pp. 326–331. DOI: https://doi.org/10.1109/ICGCIoT.2018.8753090

- Lin, S.J.; Hsu, M.F, Incorporated risk metrics and hybrid AI techniques for risk management. Neural Comput. Appl., 2017, 28: 3477−3489. DOI: https://doi.org/10.1007/s00521-016-2253-4

- Peeters, M.M.M.; van Diggelen, J.; van den Bosch, K.; et al, Hybrid collective intelligence in a human–AI society. AI Soc., 2021, 36: 217−238. DOI: https://doi.org/10.1007/s00146-020-01005-y

- Jobin, A.; Ienca, M.; Vayena, E, The global landscape of AI ethics guidelines. Nat. Mach. Intell., 2019, 1: 389−399. DOI: https://doi.org/10.1038/s42256-019-0088-2